Seeing to Learn (S2l): Observational learning of robotic manipulation tasks (Oct 2017 – Sep 2020)

The Seeing to Learn (S2l) project aims to develop observational learning approaches for robotic systems. It addresses the inability of current systems to learn new tasks solely from third-person demonstrations, whether observed directly or taken from online videos. The project envisions a future where robots equipped with observational learning can acquire new skills and abilities from demonstrations, as human beings do. [website] [paper] [slides]

Self-Repairing Cities (Oct 2016 – Sep 2017)

'Self-Repairing Cities' is a five-year, £4.2m EPSRC-funded project exploring the use of robots and autonomous systems in city infrastructure. It is run by a consortium of the Universities of Leeds (lead), Birmingham, UCL and Southampton.

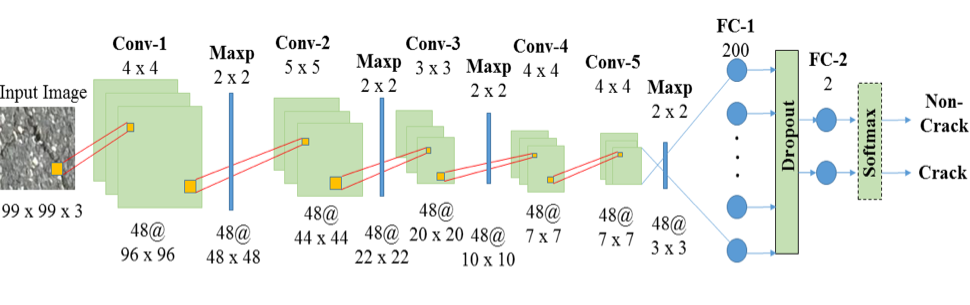

My role in the project was to explore deep-learning-based computer vision methods for detecting cracks in pavement images, using a special kind of neural network called a convolutional neural network (CNN). Thanks to its multi-layered, hierarchical architecture, the CNN's convolutional layers automatically extract the visual features relevant to crack detection from the pavement images. The fully connected layers then use these features to classify each input image as cracked or non-cracked. The results of this work were published at the International Symposium on Automation and Robotics in Construction (ISARC) 2017.

[website]

[view paper]

[view code]
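The crack classifier described above can be illustrated with a minimal, pure-Python forward pass (convolution, ReLU, max pooling, then a fully connected layer with a sigmoid output) over a tiny grayscale patch. This is a sketch, not the network from the ISARC 2017 paper: the single 3x3 "dark line" kernel, the fully connected weights and the bias are hand-picked hypothetical values rather than learned parameters, and helper names such as `classify_patch` are illustrative only.

```python
import math


def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation (no padding) of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU non-linearity."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def classify_patch(patch, kernels, fc_weights, fc_bias, threshold=0.5):
    """Conv -> ReLU -> pool for each kernel, flatten, then one sigmoid output unit."""
    fmaps = [max_pool(relu(conv2d(patch, k))) for k in kernels]
    features = [v for fmap in fmaps for row in fmap for v in row]
    if len(features) != len(fc_weights):
        raise ValueError("fc_weights must have one weight per flattened feature")
    z = sum(f * w for f, w in zip(features, fc_weights)) + fc_bias
    p_crack = 1.0 / (1.0 + math.exp(-z))
    return ("cracked" if p_crack >= threshold else "non-cracked"), p_crack


# Hypothetical 8x8 patch: bright pavement (1.0) with a dark crack in column 4.
crack_patch = [[0.0 if x == 4 else 1.0 for x in range(8)] for _ in range(8)]
plain_patch = [[1.0] * 8 for _ in range(8)]

# Hand-picked kernel: positive only when the centre column is darker than its neighbours.
line_kernel = [[1, -2, 1],
               [1, -2, 1],
               [1, -2, 1]]
fc_weights = [0.5] * 9  # 1 kernel x (3x3 pooled map) = 9 features
fc_bias = -2.0

print(classify_patch(crack_patch, [line_kernel], fc_weights, fc_bias))  # ('cracked', ~0.999)
print(classify_patch(plain_patch, [line_kernel], fc_weights, fc_bias))  # ('non-cracked', ~0.119)
```

In a trained CNN the kernels are learned rather than designed by hand, and many kernels are stacked across several layers, which is what gives the network its hierarchical features.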

Computer Vision Based Drowsiness Detection System (Aug 2015 – May 2016)

The aim of the project was to develop a computer vision system for drowsiness detection. The system uses an ordinary web camera and a computer vision algorithm to determine whether the subject is drowsy or active. Its first stage is a tracking algorithm that accurately tracks the subject's eyes in video captured by a normal consumer-grade camera. It then determines whether the eyes are open or closed using a combination of HOG (Histogram of Oriented Gradients) features and SVM (support vector machine) classifiers. Finally, the system calculates the PERCLOS value (the time for which the eyes were closed within one minute): if this value is above a set threshold, the person is classified as drowsy; otherwise, as active. [blog] [paper 1] [paper 2] [paper 3] [view code]

CAMbot: Customer Assistance Mobile Manipulator Robot (Jul 2014 – Apr 2015)

The project developed a Customer Assistance Mobile Manipulator Robot for use in supermarkets and large shopping malls. The robot assists customers by recommending products through face, gender and emotion recognition based on image processing; finding the required products with the help of a user interface developed in C#; identifying commodities on shelves using an RFID tagging system; guiding customers through the store using built-in maps stored in the robot's memory; and picking and placing commodities with its robotic arm. The project is interdisciplinary, spanning image processing (robotic vision), embedded systems (microcontrollers), electrical components (motors, servos and drivers), mechanical design and kinematics (load-motor torque relation and the robotic arm), electronic circuits (power supply circuits) and programming (C#, MATLAB, Python and embedded C). [website] [paper 1] [paper 2] [paper 3] [code]
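The text does not say how the robot plans routes over its stored map. As a hypothetical illustration only, if the store map were held as an occupancy grid (0 = free aisle, 1 = shelf), a shortest route from the robot to a product's location could be found with breadth-first search. The function name `shortest_route` and the example map are assumptions, not CAMbot's code.

```python
from collections import deque


def shortest_route(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid (hypothetical map format).

    grid[r][c] == 0 marks a free cell, 1 marks a shelf or obstacle.
    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0
                    and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                frontier.append((nr, nc))
    return None


# Hypothetical store map: 0 = aisle, 1 = shelf.
store_map = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 1, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 0, 0],
]
route = shortest_route(store_map, start=(0, 0), goal=(4, 0))
print(route)  # 9 cells (8 moves), going around the shelves via (2, 2) and (3, 2)
```

Because every move has the same cost on this grid, BFS returns a route with the fewest moves; a map with weighted aisles would call for Dijkstra's algorithm or A* instead.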

Automatic sorting and grading system for mangos (May 2014 – Dec 2014)

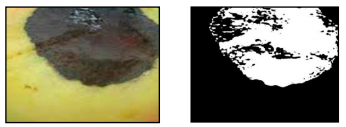

The project was aimed at developing an automatic system for sorting and grading mangos using computer vision algorithms and a robotic arm. The system is intended to replace the manual sorting and grading technique currently used in India. The developed system speeds up the entire process considerably and is more accurate and efficient than the traditional techniques.

The project was funded under the Technical Education Quality Improvement Programme (TEQIP) Phase II, CUSAT.

[code]

[paper]

[dataset]

Handwritten Digit Recognition System for South Indian Languages (Sep 2013 – Dec 2014)

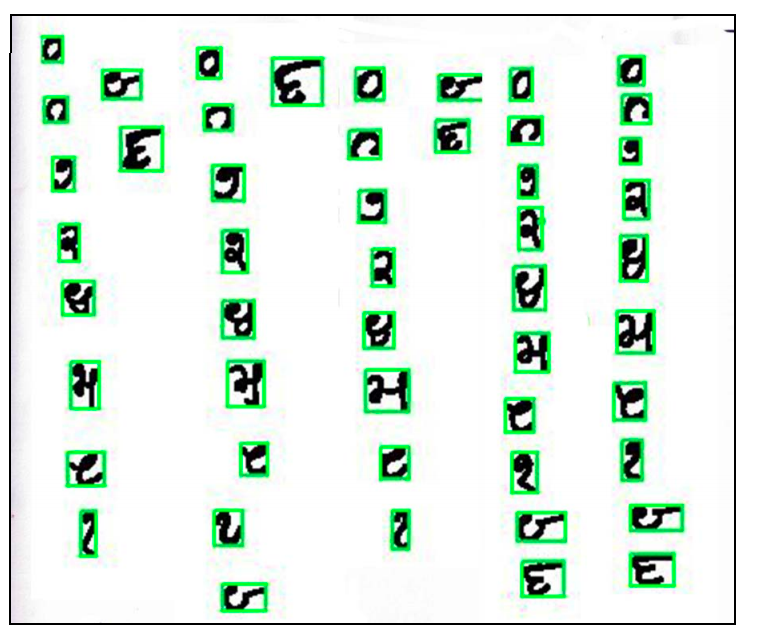

We developed a novel approach for recognising handwritten digits in South Indian languages using an artificial neural network (ANN) and Histogram of Oriented Gradients (HOG) features. Documents containing handwritten digits are optically scanned and segmented into individual images of isolated digits. HOG features are then extracted from these images and fed to the ANN for recognition. The system recognises the digits with an overall accuracy of 83.4%.

[paper]
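The HOG feature extraction step described above can be sketched in pure Python. This is a simplified, hypothetical version for illustration, not the project's code: it uses central-difference gradients, 9 unsigned orientation bins over 0–180°, hard (non-interpolated) voting and per-cell L2 normalisation, whereas standard HOG normalises over overlapping blocks of cells. The 4-pixel cell size and the function name `hog_features` are assumptions. The resulting vector is what would be passed to the ANN classifier.

```python
import math


def hog_features(image, cell=4, bins=9):
    """Simplified HOG descriptor for a grayscale image given as a list of rows."""
    h, w = len(image), len(image[0])
    bin_width = 180.0 / bins
    features = []
    for cy in range(0, h - cell + 1, cell):
        for cx in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for y in range(cy, cy + cell):
                for x in range(cx, cx + cell):
                    # Central differences, clamped at the image border.
                    gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
                    gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
                    magnitude = math.hypot(gx, gy)
                    # Unsigned orientation in [0, 180) degrees.
                    angle = math.degrees(math.atan2(gy, gx)) % 180.0
                    # "% bins" guards against an angle that rounds up to exactly 180.0.
                    hist[int(angle // bin_width) % bins] += magnitude
            norm = math.sqrt(sum(v * v for v in hist)) or 1.0  # avoid dividing by zero
            features.extend(v / norm for v in hist)
    return features


# Hypothetical 8x8 digit-like image with a vertical stroke in columns 3-4.
stroke = [[1.0 if x in (3, 4) else 0.0 for x in range(8)] for _ in range(8)]
features = hog_features(stroke, cell=4, bins=9)
print(len(features))  # 36 = 4 cells x 9 bins
```

For this vertical stroke all gradient energy falls into the 0° bin of each cell, while a horizontal stroke would land in the 80–100° bin; it is this orientation pattern that makes HOG vectors useful inputs for distinguishing digit shapes.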